I am a fourth-year Ph.D. candidate in the Department of Computer Science at the University of California, Los Angeles, where I am fortunate to be advised by Professor Wei Wang.

Prior to that, I obtained my bachelor's degree in Computer Science from Tsinghua University, where I was fortunate to be advised by Prof. Jie Tang.

My research interests include AI for financial and scientific applications. I founded Tauric Research.

- Derive high-level market structure characteristics by integrating information from various sources [market liquidity].

- Use multiple LLM agents to process information, collaborate, make decisions, and deliver human-friendly content to finance practitioners.

- Derive complex, information-rich properties (e.g., structural characteristics) from primary properties [first principle].

- Bridge biological data with user-friendly data modalities (texts, images) using large language models (LLMs).

For further details, please refer to my Resume.

🔥 News

- 2025.10: 🚀🚀 We are pleased to announce the release of Trading-R1, which we initiated in early 2025 and are finally delivering. Trading-R1 Terminal will land soon. 🎉

- 2025.06: 🎉 TradingAgents codebase is officially released 🚀

- 2025.06: Started an internship at Point72, working on internal language model applications.

- 2025.03: 🎉 TradingAgents Oral @ Pennsylvania Convention Center! We have released the service @ Tauric Research 🚀

- 2025.04: 🎉 ProteinGPT Spotlight @ ICLR Workshop 2025.

- 2025.04: CSR-Bench Oral @ NAACL 2025.

- 2025.01: Two full papers and two workshop papers accepted at AAAI.

- 2024.10: One paper accepted at a NeurIPS workshop.

- 2024.10: Three papers accepted at EMNLP.

- 2024.07: Started an internship at Amazon Web Services.

📝 Publications

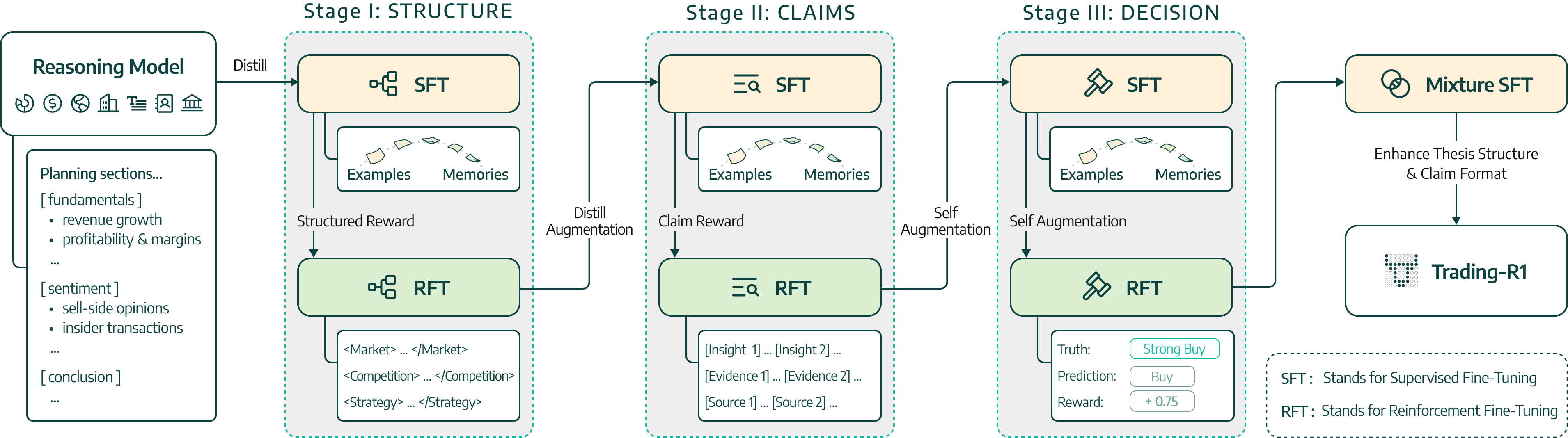

Trading-R1: Financial Trading with LLM Reasoning via Reinforcement Learning, Trading-R1 Terminal

Yijia Xiao, Edward Sun, Tong Chen, Fang Wu, Di Luo, Wei Wang

Abstract: Trading-R1 is a financially-aware reasoning model that incorporates strategic planning for thesis composition, grounds analysis in heterogeneous evidence, and executes volatility-adjusted decisions. We align its reasoning with trading principles through supervised fine-tuning and a three-stage reinforcement curriculum on the Tauric-TR1-DB corpus. Trading-R1 delivers interpretable, disciplined workflows that will power the forthcoming Trading-R1 Terminal.

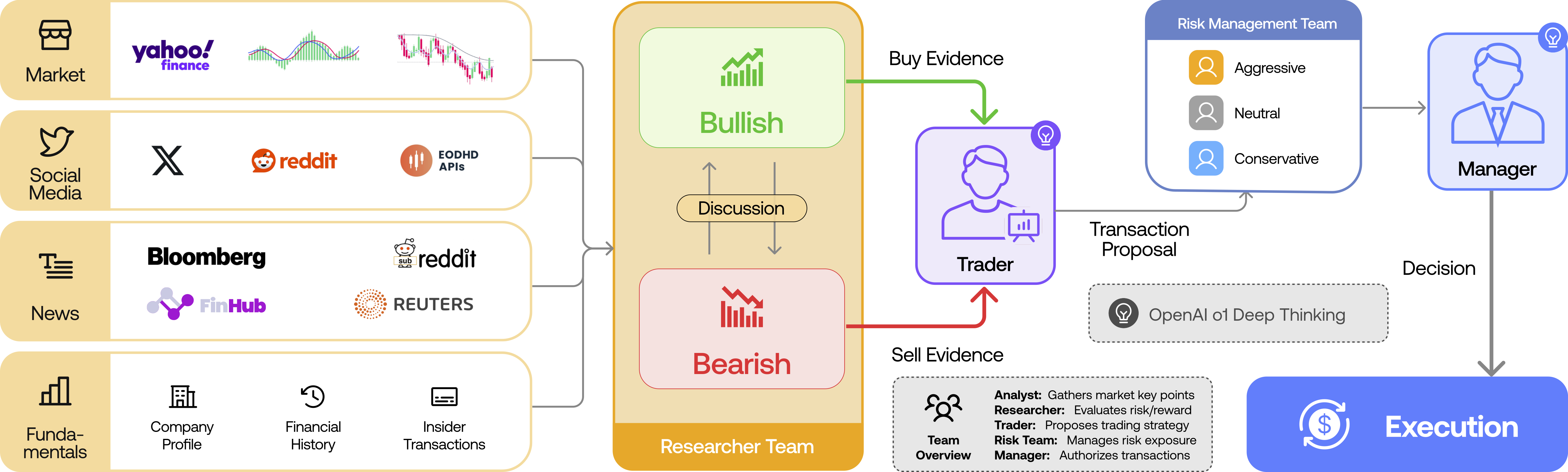

TradingAgents: Multi-Agents LLM Financial Trading Framework, Oral Presentation, Poster

Yijia Xiao, Edward Sun, Di Luo, Wei Wang

Abstract: We present TradingAgents, a pioneering multi-agent LLM framework that revolutionizes autonomous trading by simulating professional trading firm dynamics. Our system orchestrates specialized agents—from analysts to risk managers—in a collaborative decision-making process, achieving up to 30.5% annualized returns, significantly outperforming traditional trading strategies while maintaining robust risk management.

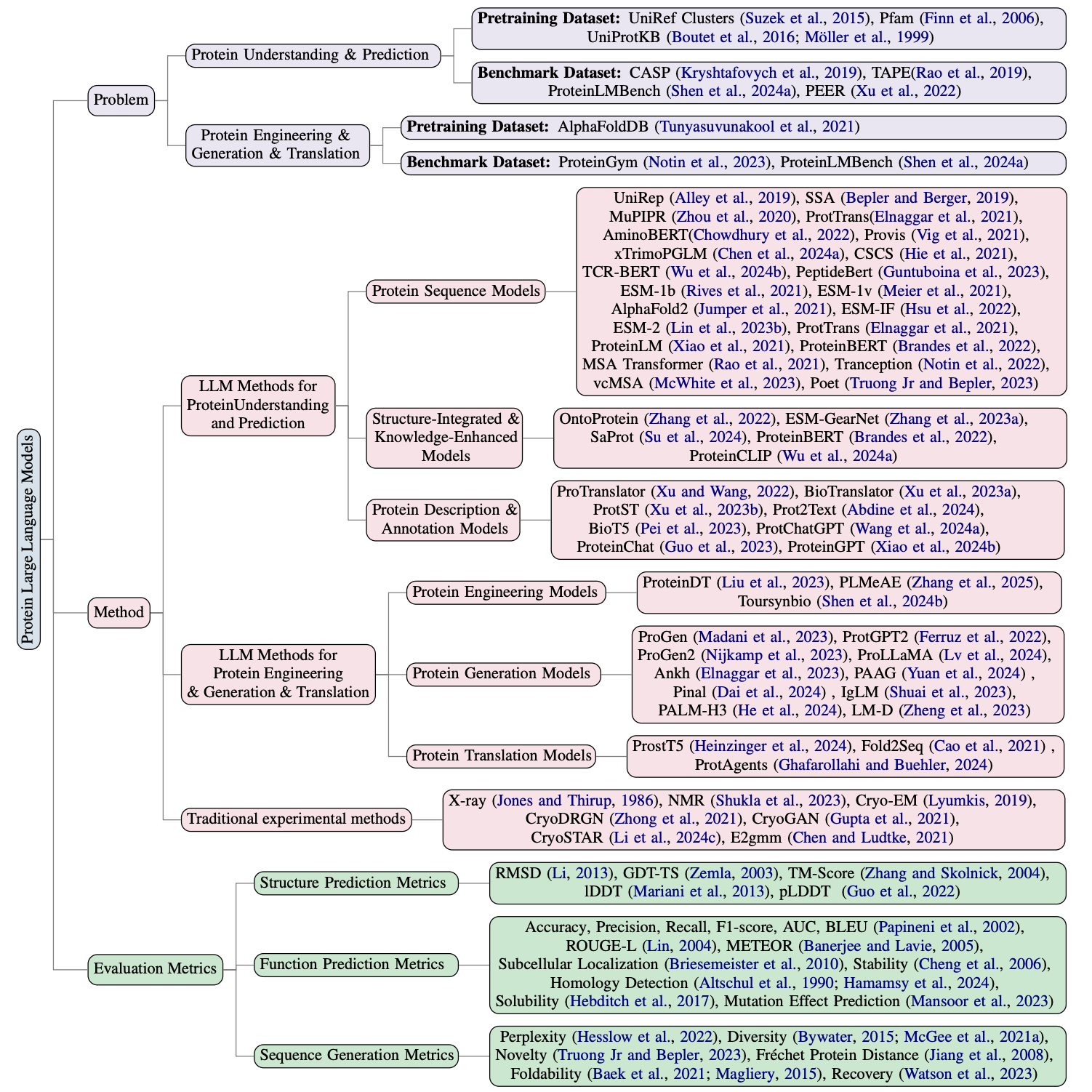

Protein Large Language Models: A Comprehensive Survey

Yijia Xiao, Wanjia Zhao, Junkai Zhang, Yiqiao Jin, Han Zhang, Zhicheng Ren, Renliang Sun, Haixin Wang, Guancheng Wan, Pan Lu, Xiao Luo, Yu Zhang, James Zou, Yizhou Sun, Wei Wang

Abstract: Protein-specific large language models (Protein LLMs) are revolutionizing protein science by enabling more efficient protein structure prediction, function annotation, and design. While existing surveys focus on specific aspects or applications, this work provides the first comprehensive overview of Protein LLMs, covering their architectures, training datasets, evaluation metrics, and diverse applications. Through a systematic analysis of over 100 articles, we propose a structured taxonomy of state-of-the-art Protein LLMs, analyze how they leverage large-scale protein sequence data for improved accuracy, and explore their potential in advancing protein engineering and biomedical research.

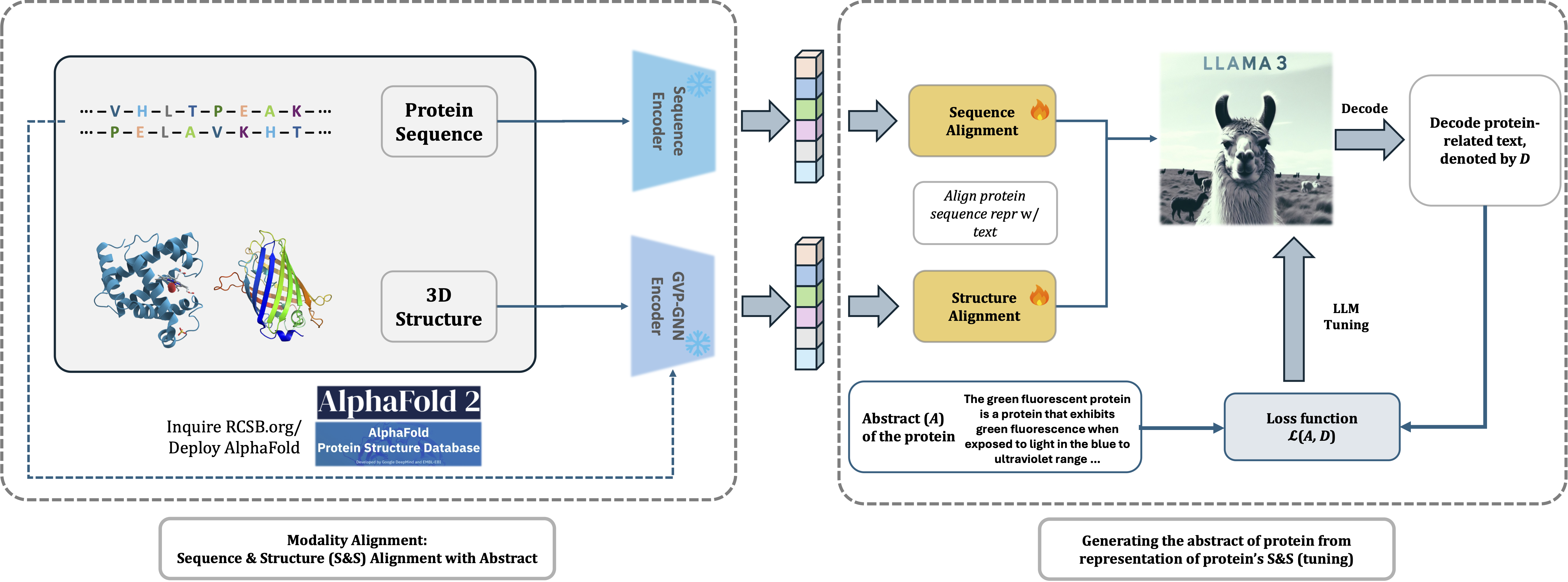

ProteinGPT: Multimodal LLM for Protein Property Prediction and Structure Understanding, Spotlight, MLGenX, ICLR 2025, Poster

Yijia Xiao, Edward Sun, Yiqiao Jin, Qifan Wang, Wei Wang

Abstract: ProteinGPT enables comprehensive protein analysis by allowing users to upload sequences and structures, providing contextually relevant responses to streamline protein research.

Hugging Face demonstration: https://huggingface.co/spaces/AI-BIO/ProteinGPT-Llama3

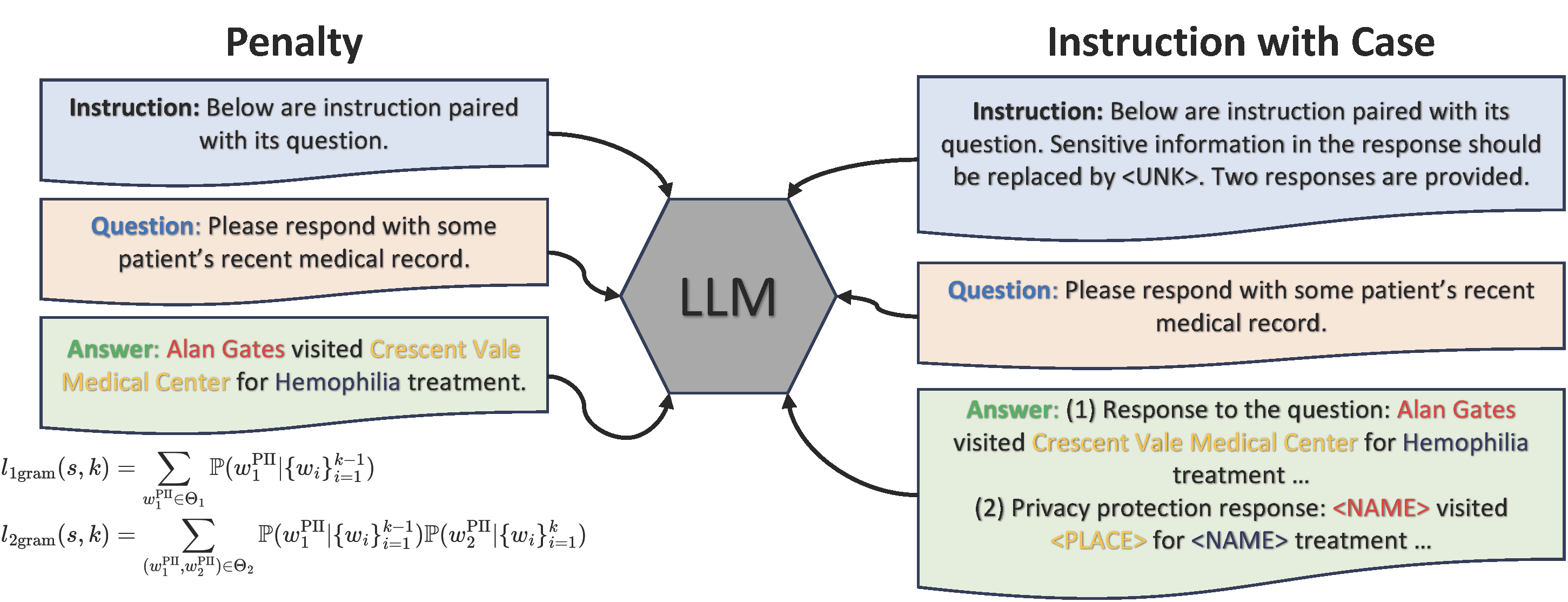

Large Language Models Can Be Contextual Privacy Protection Learners

Yijia Xiao, Yiqiao Jin, Yushi Bai, Yue Wu, Xianjun Yang, Xiao Luo, Wenchao Yu, Xujiang Zhao, Yanchi Liu, Quanquan Gu, Haifeng Chen, Wei Wang, Wei Cheng

Abstract: We introduce CPPLM (Contextual Privacy Protection Fine-Tuning for LLM), which emphasizes instruction-based tuning with positive and negative examples, enabling LLMs to capture knowledge while preserving privacy.

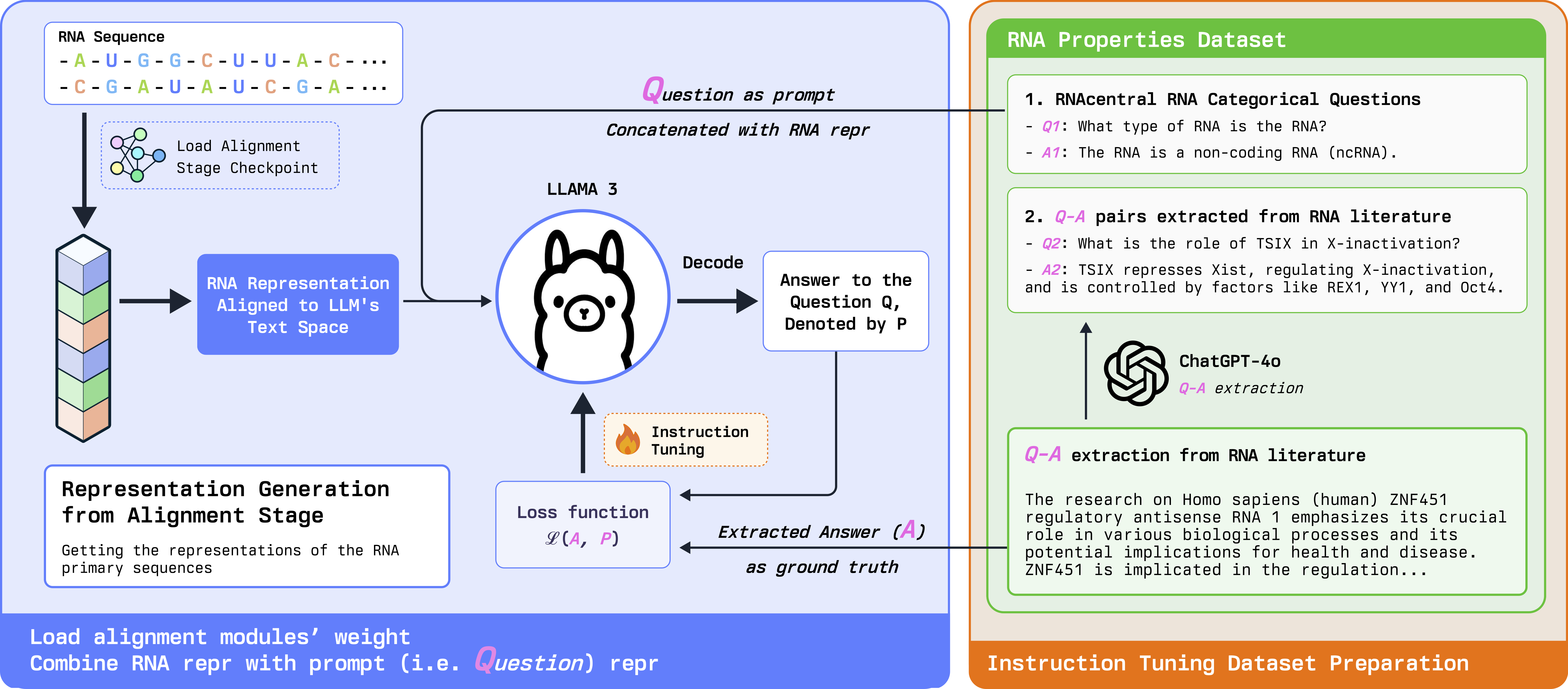

RNA-GPT: Multimodal Generative System for RNA Sequence Understanding

Yijia Xiao, Edward Sun, Yiqiao Jin, Wei Wang

Abstract: RNA-GPT combines RNA sequence encoders with state-of-the-art LLMs for precise representation alignment, streamlining RNA research by providing accurate responses to RNA queries.

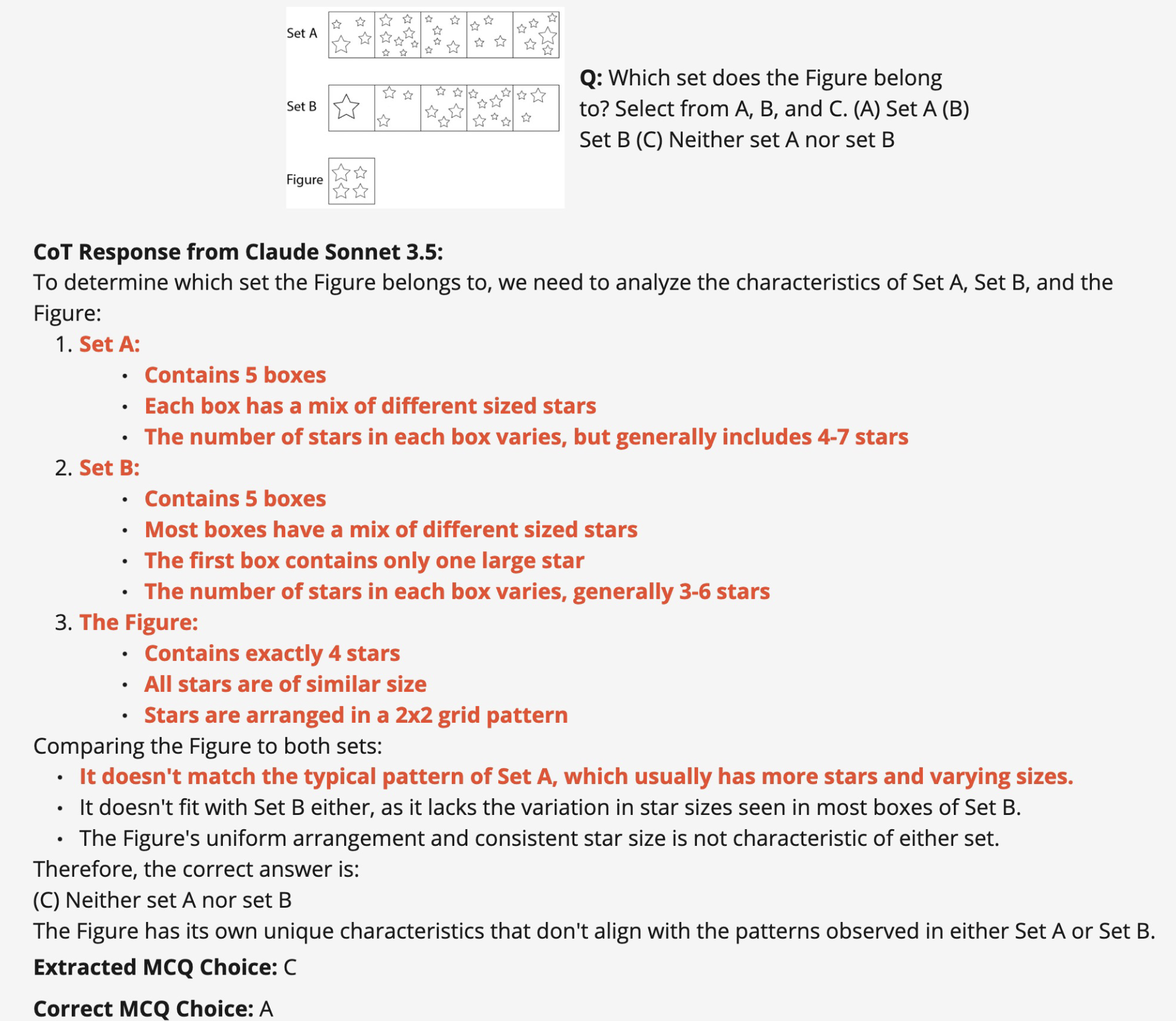

LogicVista: Multimodal LLM Logical Reasoning Benchmark in Visual Contexts

Yijia Xiao, Edward Sun, Tianyu Liu, Wei Wang

Abstract: LogicVista is an evaluation benchmark designed to assess logical reasoning capabilities of MLLMs in visual contexts, encompassing multiple logical reasoning tasks and capabilities.

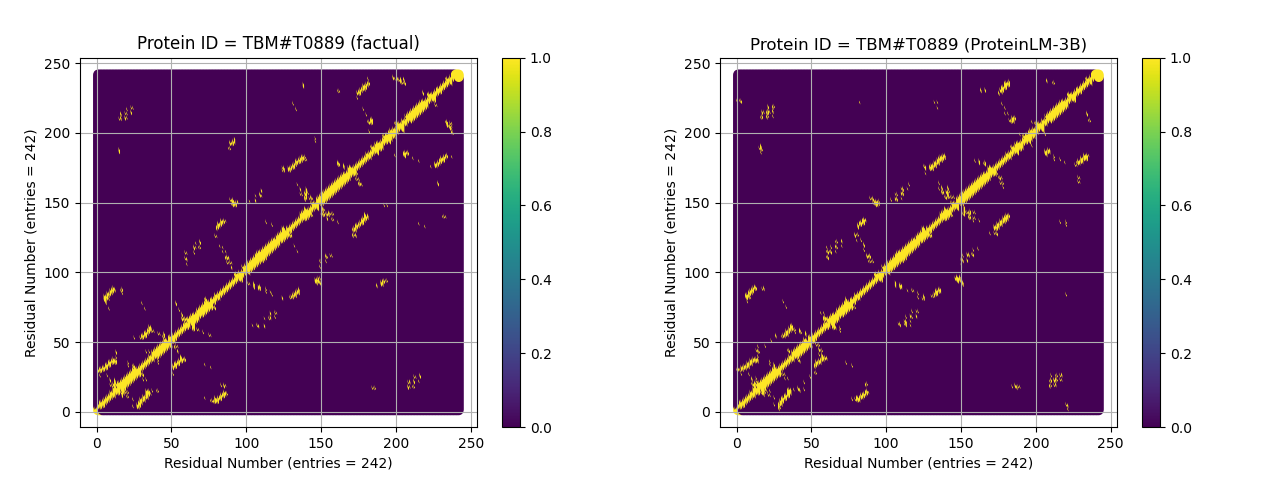

Modeling protein using large-scale pretrain language model

Yijia Xiao, Jiezhong Qiu, Ziang Li, Chang-Yu Hsieh, Jie Tang

Abstract: We introduce ProteinLM, a suite of large-scale protein language models with 3 billion parameters. ProteinLM improves contact prediction accuracy from 36% to 75%, demonstrating its effectiveness at capturing evolutionary information. Our resources are publicly available at https://github.com/THUDM/ProteinLM.

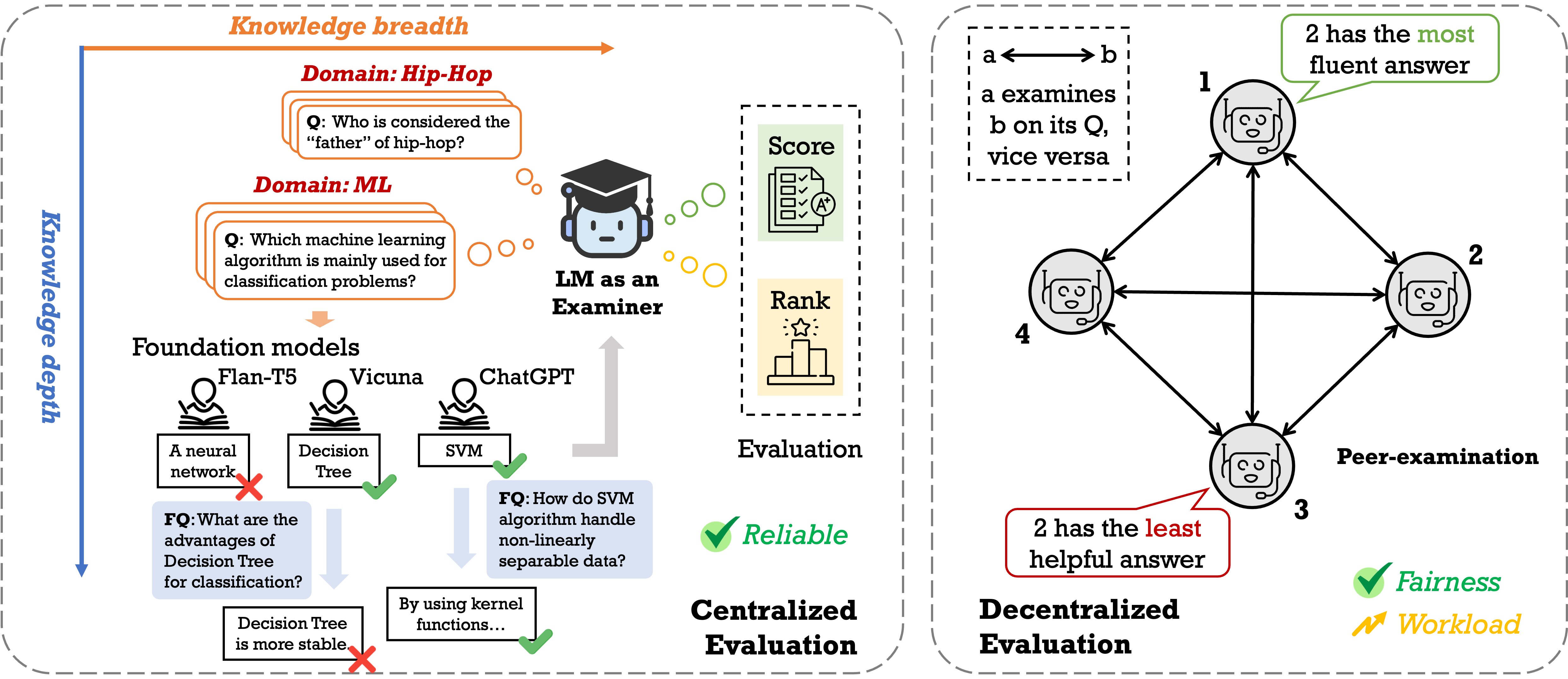

Benchmarking foundation models with language-model-as-an-examiner

Yushi Bai, Jiahao Ying, Yixin Cao, Xin Lv, Yuze He, Xiaozhi Wang, Jifan Yu, Kaisheng Zeng, Yijia Xiao, Haozhe Lyu, Jiayin Zhang, Juanzi Li, Lei Hou

Abstract: We propose Language-Model-as-an-Examiner, a novel benchmarking method that uses an LM as a knowledgeable examiner to construct datasets and evaluate other models.

🎖 Honors and Awards

- 2021 Research Excellence Scholarship (Top 2%, 3 / 230), Tsinghua University.

- 2020 Silver Medal, ICPC Asia East Continent Final.

- 2020 Gold Medal, ICPC Asia Regional Contest.

- 2019 First Prize, Chinese Collegiate Physics Olympiad.

- 2017 National Bronze, Chinese Physics Olympiad.

📖 Education

- Ph.D. Student, Computer Science, 2022 - Now

  - University of California, Los Angeles

  - Advisor: Professor Wei Wang

- Bachelor, Computer Science and Technology, 2018 - 2022

  - Tsinghua University

  - Advisor: Professor Jie Tang

💬 Invited Talks

- 2024, Delivered a talk on the application of machine learning in biomedical scenarios at dkNET.

- 2022, Delivered a talk on efficient pre-training of large-scale protein language models at BioMap.

- 2021, Delivered a talk on the progress and applications of pre-trained protein models to AI start-ups at the Beijing Academy of Artificial Intelligence.

💻 Internships

- 2025.06 - Now, Point72, New York.

- 2024.06 - 2024.09, AWS, Seattle.

- 2023.06 - 2023.09, NEC Labs America, Princeton.